Your Job Shaped Code

How to train for interview questions (and why to enjoy them)

TL;DR:

How can we produce interview question solutions that communicate well, are modular, do not sacrifice testability under pressure, and still transfer skills to the other programs we write?

Understanding how to code, and demonstrating or communicating that understanding in tech interviews, are two different skills.

Although being a proficient coder should improve your ability to pass tech interviews, interviewing poses unique problems that you must acknowledge and solve to advance to the next hiring phase.

As in business writing, tech interviewers want to see the conclusion of your thinking before they see the process leading to it, even while you are still coding.

Even so, there is significant overlap between programming skills that help plan out your code's structure and readability and skills for communicating well to tech interviewers.

Focusing on these skills lays the foundation for improving your engineering performance, while demonstrating them regularly under time pressure strengthens your ability to communicate your solutions and be confident in them.

Through spaced repetition, competitive programming, and code design recipes inspired by formal methods and AI, we can become better interview candidates while still improving ourselves as programmers and engineers.

Introduction: The Engineer Hoop versus Interview Hoop

A common sentiment among those entering the tech industry as software developers is that technical interviews do not demonstrate your capabilities as an engineer. Industry veterans share this sentiment too. James Hague, a game programmer and writer of the venerable Dadgum blog, argued that organizational skills, not "algorithmic wizardry," separate good programmers from bad ones.

The argument against technical interviews that rely on algorithm questions goes as follows:

Most large software systems glue libraries and APIs together to provide business value through applications.

Interview questions focus on algorithms which these libraries and APIs already implement, yet candidates must solve these problems within 15-20 minutes.

As a software engineer, my primary concern is implementing features by chaining libraries and APIs together over several weeks per feature, as efficiently and maintainably as possible, so that I deliver business value that the application will sustain in the long run.

Therefore, interview questions do not test this skill directly, as they use problems I won't encounter as a software engineer or would have more time to solve.

Thus, interview questions can't be a relevant test for my fit for a job.

Beyond this, the argument moves on to the question: "What makes a good programmer, anyway?"

Do software engineers need to know algorithms to shovel CRUD?

As trends like using ChatGPT to solve coding problems quickly and no-code application development gain ground, aren't developers' ideas and organizational skills more important?

Isn't the hallmark of an excellent developer the ability to automate mundane coding while sustaining focus on the problems that are important for a business to solve?

Indeed, all of the issues above have inspired a hiring trend where interviewers ask systems design questions alongside algorithm questions to measure developers' ability to demonstrate higher-level thinking.

Just as "Cracking the Coding Interview" taught developers how to jump through the algorithm question hoop, guides for studying systems design questions have also appeared (including for front-end developers like myself).

But regardless of this trend, algorithm interview questions are still asked by top corporations, even for senior engineering roles. Even with this second hoop, candidates must still leap the first one.

Why do interviewers ask algorithm questions in the first place?

To understand why the algorithm question hoop still stands, we must know what tech interviewers get out of asking them. The biggest benefit algorithm questions give: eliminating false positives from the job pool as judiciously as possible.

Interviewers want to eliminate candidates judiciously because popular jobs attract too many candidates to test each one's job fit thoroughly. This popularity is also why false positives matter.

In any screening process, a larger applicant pool produces more false positives and more true positives in absolute numbers, even when the test's rates stay the same. Conversely, making a test stricter to lower its false positive rate usually lowers its true positive rate as well, though ideally by a smaller proportion. Because each job opening is usually filled by a single candidate, it is acceptable to accidentally eliminate some qualified candidates for any popular job: these companies can afford it.
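To make the counting concrete, here is a small Python sketch. Every number in it (the pool sizes, the 10% share of qualified applicants, and the pass rates) is a hypothetical assumption chosen for illustration, not hiring data.

```python
def screening_outcomes(applicants, qualified_fraction, true_positive_rate, false_positive_rate):
    """Expected numbers of qualified and unqualified applicants who pass a screen.

    All inputs are hypothetical; rates are fractions between 0 and 1.
    """
    qualified = applicants * qualified_fraction
    unqualified = applicants - qualified
    passed_qualified = qualified * true_positive_rate        # true positives
    passed_unqualified = unqualified * false_positive_rate   # false positives
    return passed_qualified, passed_unqualified


# Same test, bigger pool: the rates are unchanged, but the counts double.
print(screening_outcomes(1_000, 0.10, 0.90, 0.10))
print(screening_outcomes(2_000, 0.10, 0.90, 0.10))

# Stricter test on the original pool: far fewer false positives,
# at the price of rejecting some qualified applicants.
print(screening_outcomes(1_000, 0.10, 0.70, 0.01))
```

With these made-up inputs, the first call gives about 90 qualified and 90 unqualified passers, doubling the pool doubles both counts, and the stricter test gives about 70 and 9: far fewer false positives, but some qualified applicants lost.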

Even though algorithm questions appear disconnected from the job, they are quicker, more legible, and easier to give to everyone than systems design questions or a review of an open-source portfolio. For jobs with thousands of candidates, this is cheaper and fairer than hiring people on probation. And so the hoop stands.

But these benefits are from the standpoint of a technical interviewer, and they can leave prospective job candidates with a sour taste in their mouths. Indeed, the relationship appears one-sided. Because eliminating false positives also eliminates some true positives along the way, algorithm questions feel like busywork to engineers who could otherwise be a good fit for the job.

Why algorithm questions are more relevant than you think

When software engineers are first learning to code, many start with taking freshman- and sophomore-level courses from a college or boot camp, where:

Basic control flow is taught, usually in a procedural language like C, JavaScript, or Python (with some exceptions).

The basics of programming abstraction are taught, like classes and function decomposition, design patterns, and common idioms of the teaching language.

Basic algorithms and data structures are taught, as well as different ways of measuring performance, like Big-Oh notation.

Sometimes the second and third steps are switched or taught concurrently. Once students pass these three hurdles, they usually know enough to build software applications, or even to "be dangerous." Small and medium-sized software companies can hire these students into co-op or internship positions, and, if the students also have personal projects, so can large ones.

Software engineers use abstractions and control flow every day. And the algorithms they write most commonly are for-loops, which drill into our heads the harm of nested loops: they make time complexity blow up to O(N^2). But what graduates tend to retain is what they learned in the first two steps, not the third.
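As a concrete illustration, consider checking a list for duplicates (the shape of LeetCode's "Contains Duplicate"). The function names below are hypothetical; the point is only the contrast between a double loop and a single pass with a hash set.

```python
def has_duplicate_nested(nums: list[int]) -> bool:
    """Compare every pair of elements: about n*(n-1)/2 comparisons, O(n^2)."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] == nums[j]:
                return True
    return False


def has_duplicate_hashed(nums: list[int]) -> bool:
    """Single pass that remembers seen values in a hash set: O(n) expected time."""
    seen: set[int] = set()
    for n in nums:
        if n in seen:
            return True
        seen.add(n)
    return False


print(has_duplicate_nested([3, 1, 4, 1]), has_duplicate_hashed([3, 1, 4, 1]))
```

Both functions give the same answers; the second trades O(n) extra memory for avoiding the quadratic blow-up.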

So post-graduation, be it from a boot camp or an undergrad, software engineers often lament having to do algorithmic questions again. And if they already have experience, they know what's relevant to everyday work and what's not.

There are conceptual arguments for why you should still know how your APIs are implemented in theory, even if you might not need to know how to write them yourself in practice.

For instance, Joel Spolsky, co-founder of Stack Overflow, coined the term "Leaky Abstractions" to point out situations where libraries fail to hide all the nuances of the behaviours they cover up.

Two programming languages might both have a set data structure, yet have very different performance for access and deletion, depending on whether they use hash maps or arrays in the background. Here, knowing something about data structures and algorithms helps you predict which operations will be cheap and which will be expensive.
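A quick way to see this in Python, whose built-in list is array-backed and whose set is a hash table, is to time the same membership check against both. The container size and repeat count below are arbitrary choices, and absolute timings will vary by machine. Other languages make other choices: C++'s std::set, for instance, is typically a balanced binary tree with O(log n) lookups.

```python
import timeit

n = 100_000
as_list = list(range(n))   # array-backed: `in` scans element by element, O(n)
as_set = set(as_list)      # hash-backed: `in` hashes the probe, O(1) on average
probe = n - 1              # worst case for the list: the very last element

# Time 100 membership checks against each container.
list_seconds = timeit.timeit(lambda: probe in as_list, number=100)
set_seconds = timeit.timeit(lambda: probe in as_set, number=100)

print(f"list: {list_seconds:.6f}s  set: {set_seconds:.6f}s")
```

Both containers hold the same values and give the same answer; only the cost of asking differs, which is exactly what the abstraction hides.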

One could also point to the rule of least power, a heuristic for choosing what languages or libraries to apply to your problem.

For example, do you need Kubernetes? Or will it be a footgun because it makes simple problems harder to solve? Or is it so flexible a tool that it solves your problems, but with a lot of added complexity and unnecessary coupling between your solution's parts?

Innovative software projects often create new technologies, which others use as APIs or platforms, and building them applies knowledge of algorithms and data structures to some degree. For instance, Kubernetes, developed by Google, is written almost entirely in Go, a language also designed at Google. Go's first compilers were written in C (gccgo's front end is C++), and since Go 1.5 the main toolchain has been written in Go itself.

But these aren't the arguments I'm making here. And I am not arguing against using abstractions, libraries, or Kubernetes, as every tool has its place.

Instead, I believe that solving smaller problems quickly and with better quality adds up to better-quality code in the future.

If staff engineer roles are about taking on more responsibility for larger codebases and making impactful decisions, you would hope staff engineers have mastered the smaller problems first, especially considering what they must do.

Yes, staff engineers mainly focus on ensuring that, as systems grow, they continue to align with business value, and they do this while keeping costs down. But they must also mentor more junior engineers and make quick work of tasks blocking their engineering teams. In this way, they have to be good at problems they would have learned to solve earlier in their careers.

But beyond that, aren't these skills one can learn on the job anyway? Why should we include algorithm questions among the problems we practice?

Excellent Engineers practice to break the Iron Triangle

The project management triangle, or "Iron Triangle" (so called because it is considered an inescapable aspect of every project), is a model which says that in any project, you can only optimize for two out of three things: time, cost, and quality.

Furthermore, in any business, there is a notion of what parts of the organization are considered a cost center and what parts are a profit center.

Engineers, generally, are a cost center. Although engineers are necessary to produce features and functionality, that alone does not justify their cost, because engineers are often not directly involved in turning features and functionality into profit.

In "Don't Call Yourself a Programmer," Patrick McKenzie makes this point, arguing that engineers who do well focus on the financial value of their work rather than on the technologies they specialize in. Likewise, James Hague repeatedly argues that programmers who get too serious about their technology lose sight of why we program in the first place.

There are two ways in which engineers can avoid becoming a cost center. The first is to solve the right problem, which means getting more value for the same cost as solving a wrong one. Being able to use technology is different from solving the right problem. And when you do use technology, it's crucial that it solves more problems than it creates, providing leverage to increase quality and lower cost.

In interviews, interviewers check your ability to solve the right problem with something other than algorithm questions: they look at your past projects. Algorithm questions instead test the second way to avoid becoming a cost center: whatever problem you do solve, solve it efficiently.

Why are interview questions contrary to excellent engineering?

When it comes to technical interview questions, your solution must demonstrate four properties to satisfy an interviewer:

Communicability. As the interviewer is, in part, testing your thought process, you must be able to explain your decisions as you write code, either through your comments or talking out loud (mainly talking out loud).

Rehearsability. Your algorithm must demonstrate that you can take advantage of patterns and prior knowledge in a way appropriate for the problem.

(Semi-)Verifiability. Following the 80/20 rule, your algorithm covers most of the test cases and the most critical corner cases.

Speed. Your code must exemplify these criteria within 15-20 minutes.

Finally, candidates must do all this with confidence under pressure. This confidence comes partly from experience solving interview questions while ensuring that, to the best of your ability and up to the standards of hiring managers, your solutions are communicable, rehearsed, correct, and fast.

Looking at these four properties, and accepting that solving smaller problems is necessary before solving bigger ones, we can still see where the "irrelevance" of interview questions comes from: these questions must be solved fast while still being testably good.

In other words, in terms of the Iron Triangle, you are fixing time while trying to maximize quality and minimize the cost of searching for the correct code. But once time is fixed, quality suffers. So, while it's true that interviewers allow some slack in how well the code is verified to be correct, interviewees can find the stress of writing good code under pressure artificial. The time pressure leads to stress that interferes with thinking of test cases, to resentment of the process for not giving the candidate enough time to think, or both.

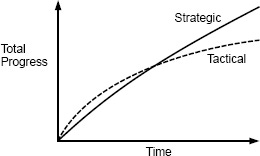

Here, interview questions stop resembling sound engineering and start to look like lousy engineering. In "A Philosophy of Software Design," John Ousterhout, creator of the Tcl scripting language and the Tk toolkit, points out the difference between tactical and strategic programming.

Tactical programmers deliver value quickly, but at the expense of creating complexity that limits how productive developers can be later. Strategic programmers, by contrast, invest time in good design so that future changes stay cheap.

This model applies to companies as well as individuals. Ousterhout identifies Facebook as a tactical company – after all, its motto was "move fast and break things." This approach resulted in large amounts of technical debt, which they had to reckon with later.

In contrast to Facebook, Ousterhout points to Google and VMware as strategic companies. Google and VMware invested in the design of their code. When code is well-designed, it becomes easier to reuse without incurring more technical debt. This is abstraction done right.

In the end, all companies want to be strategic. If two companies both solve the right problem, the one with better-designed code will be able to profit more in the long run, because its engineers will spend less time on technical debt.

Thus, it's possible for two engineering teams to both work on the right problem, but with one creating "wrong problems" to solve later on due to "unstrategic" code.

Ultimately, wanton complexity turns engineers from profit centers into cost centers. Any well-designed or well-used library starts with thoughtful, organized interfaces.

And yet these aren't the conditions that interview questions create. So how can these questions test whether you are an excellent engineer?

Why are interview questions aligned with excellent engineering?

So while the last two properties of answers to algorithm questions – speed and semi-correctness – don't feel aligned with the job of entry- or mid-level software engineering, the first two properties do.

Communicability and Rehearsability are relevant to engineering interviews and applicable to all software engineering throughout a developer's career.

Interviewers only have so much time to test you, leading to sacrifices in your ability to produce verifiable code while also being quick. However, what they are looking for is that you could write good code if given the time to do so.

This point is essential. It is the keystone that bridges interview questions and regular software development together.

It also gives candidates some freedom: they can show how they would solve an algorithm problem without solving it completely. However, it also introduces opportunities for mistakes that only exist during the interview process.

In "Principles," hedge fund manager Ray Dalio provides examples of what he looks for in people communicating during meetings. Good communicators, he argues, "understand above the line and below the line thinking" and can move between both.

Notably, this model for good communication also resembles top-down functional decomposition of programs.
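A minimal Python sketch of what top-down decomposition can look like on a LeetCode-style easy question: checking whether a sentence is a palindrome once punctuation and case are ignored. The top-level function states the whole plan in terms of helpers, loosely the "above the line" view, and the helpers supply the "below the line" details. All names here are hypothetical.

```python
def is_palindrome_sentence(text: str) -> bool:
    """Above the line: the whole plan, stated in terms of helpers."""
    letters = normalize(text)
    return reads_same_both_ways(letters)


def normalize(text: str) -> str:
    """Below the line: keep only letters and digits, lower-cased."""
    return "".join(ch.lower() for ch in text if ch.isalnum())


def reads_same_both_ways(s: str) -> bool:
    """Below the line: compare the string with its reverse."""
    return s == s[::-1]


print(is_palindrome_sentence("A man, a plan, a canal: Panama"))
```

An interviewer can follow the first function before either helper exists, and each helper can be written and tested on its own.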

For interviews, communication becomes difficult because tough questions contain unknowns. With novel, difficult interview questions, candidates will likely explore many solutions, including false starts, or freeze up under pressure.

But there is a way to square the circle. Rather than building solutions up from the details, one could solve these problems top-down. And there are known programming methods that do this:

Designing from a wishlist. For instance, the SPROUT method.

Design from examples, tests and types first. Design Recipes for programs.

Explain the code in the comments as you write it. Literate Programming.

Fill in your algorithm's structure. Refining algorithms from invariants.
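As one sketch of refining an algorithm from an invariant, here is a binary search in Python written by first stating what must stay true on every loop iteration, then choosing updates that preserve it. The function name is hypothetical; its contract matches the standard library's bisect.bisect_left.

```python
def first_at_least(sorted_nums: list[int], target: int) -> int:
    """Return the smallest index i with sorted_nums[i] >= target,
    or len(sorted_nums) if no such index exists."""
    lo, hi = 0, len(sorted_nums)
    # Invariant: every index < lo holds a value < target,
    #            every index >= hi holds a value >= target.
    while lo < hi:
        mid = (lo + hi) // 2
        if sorted_nums[mid] < target:
            lo = mid + 1  # mid and everything left of it are < target
        else:
            hi = mid      # mid and everything right of it are >= target
    # On exit lo == hi, so the invariant pins down the answer exactly.
    return lo


print(first_at_least([1, 3, 3, 5], 4))
```

Writing the invariant first also tells you the loop condition (`lo < hi`) and which side `mid` joins, the two places binary searches most often go wrong.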

These methods put what interviewers need to see up front. They show function decomposition, desired behaviour, examples, comments, and tests. Candidates address these issues first so the interviewer knows where they are going, even if they don't finish solving the problem. They are good programming practices outside of interviews too!
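Here is a small Python adaptation of design-recipe steps (HtDP itself teaches them in Racket's teaching languages, and DCIC in Pyret), applied to the LeetCode-style "best time to buy and sell stock" question. The step labels in the comments are a paraphrase of the recipe, not its exact wording, and the examples are written before the body so they double as doctests.

```python
# Step 1, signature and purpose: daily prices in, best single-trade profit out.
def max_profit(prices: list[int]) -> int:
    """Return the largest profit from buying on one day and selling on a
    later day, or 0 if no trade makes money.

    Step 2, examples, written before the body (they also run as doctests):

    >>> max_profit([7, 1, 5, 3, 6, 4])
    5
    >>> max_profit([7, 6, 4, 3, 1])
    0
    >>> max_profit([])
    0
    """
    # Step 3, template: one pass over a list, carrying accumulators.
    lowest_so_far = None  # cheapest price seen so far (the best day to buy)
    best = 0              # best profit found so far
    for price in prices:
        # Step 4, body: fill in the template with problem-specific logic.
        if lowest_so_far is None or price < lowest_so_far:
            lowest_so_far = price
        else:
            best = max(best, price - lowest_so_far)
    return best


# Step 5, testing: run the docstring examples.
if __name__ == "__main__":
    import doctest
    doctest.testmod()
```

Even if the body were unfinished, the signature, purpose, and examples already tell an interviewer what the code is meant to do.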

But if you code in a way with no structure, even if you end up at the solution, it will be challenging to communicate your thinking in a way that interviewers can follow.

Still, there are tradeoffs:

Programmers are not always taught to write code or think this way. Some programmers perfect small details before adding complexity, akin to the Unix philosophy. This approach is not invalid, but it fails to put the most important things to communicate first, so adopting top-down design requires a switch in programming style.

The interview code must still run to be verified, and interleaving coding with outlining takes practice. The wrong decomposition can also lock you into a bad design too early.

But considering the importance of Communicability and Rehearsability, learning how to write algorithms top-down will help interviewers understand your thought process while you solve an algorithms question. As it's one of the most transparent ways to design programs, it is also an approach worth learning for all engineering.

Conclusion: a model for programming practice

With speed and semi-verifiability being the two ways that interview questions stop resembling excellent engineering, they start to resemble another tech subculture: competitive programming.

On average, competitive programmers must solve 10-20 algorithm problems within 2-3 hours. That is roughly ten minutes a question, making the interview timing of 15-20 minutes appear luxurious.

Instead of persuading you of the value of algorithm questions, this comparison might make your view of them more dismal. If engineers become a cost center by cutting corners, and even veteran programmers like James Hague and Patrick McKenzie argue that you should focus on something other than code, or stop calling yourself a programmer, then how could competitive programming be relevant?

But on the other hand, doing these questions well might be crucial for the same reason that tech interviewers find them essential. They provide an objective, bite-sized way of practicing your programming skills.

Although personal software projects are also helpful for practicing programming and should be the primary way you do so, such projects are typically not bite-sized.

Choosing what project to work on and scoping it can also be tricky, and it demands different skills than programming. It is also possible to have a significant project built on code that could have been better. Or to have a great project and codebase but be a poor communicator, and so not get hired. One example is Max Howell, creator of the Homebrew package manager used by millions, who has written about failing Google's interviews and why Google didn't hire him.

Competitive programmers sacrifice code quality even further, earning a reputation as bad hires because of their preference for terse naming and unreadable one-liners. But that doesn't have to hold when we practice interview questions.

We can instead use the adage, "slow is smooth, smooth is fast." And we can practice programming methods aligned with excellent engineering, hoping to become faster at them, so all our code becomes communicable, rehearsable, and verifiable - quickly.

These observations give us our problem statement:

How can we produce interview question solutions that communicate well, are modular, do not sacrifice testability under pressure, and still transfer skills to the other programs we write?

By combining the following skills and rehearsing them until they become second nature:

Communicability

Design Recipes (from HtDP, DCIC, and more)

SPROUT Method/Top-Down Design

Literate Programming

Rehearsability

Black-Box Study of Data Structures and Algorithms

Focusing on the relationships between data structures

Focusing on patterns of problem-solving found everywhere, like recursion

Design Patterns and Programming Idioms

(Semi-)Verifiability

Thinking about thinking about examples

Learning debugging strategies to anticipate bugs

Combined with design recipes, use examples to motivate tests and vice versa

Speed

Scoring oneself based on a time limit, then diagnosing why one went overtime after solving the question

Competitive Programming

Spaced Repetition
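One way to let examples drive tests, sketched in Python: write the examples as a table first (typical cases plus the few corner cases that matter most), then run the solution against the table. Two Sum stands in as the problem here. The names two_sum, EXAMPLES, and run_examples are hypothetical, and returning an empty list when no pair exists is an assumption of this sketch, since LeetCode's version guarantees a solution.

```python
def two_sum(nums: list[int], target: int) -> list[int]:
    """Return indices [i, j], i < j, with nums[i] + nums[j] == target, else []."""
    seen: dict[int, int] = {}  # value -> most recent index holding it
    for j, n in enumerate(nums):
        if target - n in seen:
            return [seen[target - n], j]
        seen[n] = j
    return []


# Examples first: typical cases, then the corner cases worth the most.
EXAMPLES = [
    (([2, 7, 11, 15], 9), [0, 1]),  # typical case
    (([3, 2, 4], 6), [1, 2]),       # answer does not start at index 0
    (([3, 3], 6), [0, 1]),          # duplicate values
    (([1, 2], 7), []),              # no pair exists (assumed behaviour)
]


def run_examples(func, examples):
    """Run func on each (args, expected) pair and collect any mismatches."""
    failures = []
    for args, expected in examples:
        actual = func(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures


print(run_examples(two_sum, EXAMPLES) or "all examples pass")
```

Because the table is plain data, adding a newly discovered corner case is one line, and a failing row reports the inputs, the expected answer, and what the code actually returned.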

I will explain the model further and my experience with testing it in future posts.

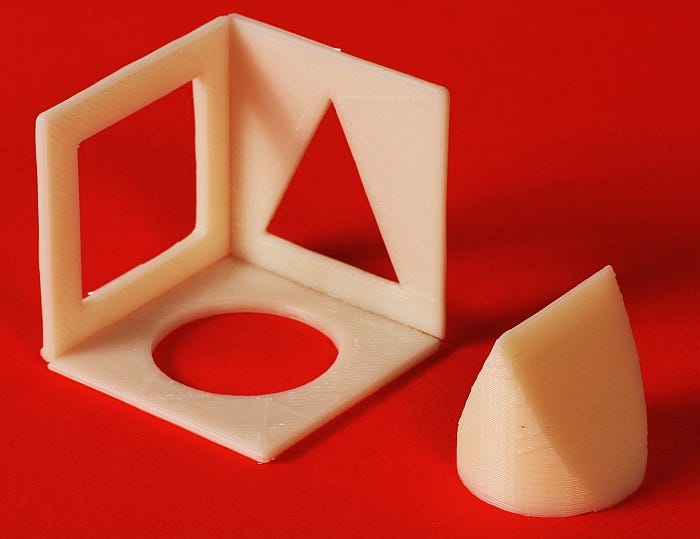

Fitting the square peg into the round hole

Interview questions are a necessary evil for tech interviewers. Because interviewers use such questions to rule out false positives in the hiring process, there is bound to be a disconnect between what these questions "actually" measure and how well the results reflect a hire's eventual job performance.

However, these questions still allow developers to practice good coding habits that produce communicable, rehearsable, verifiable code.

These habits, if made automatic, make developers more efficient programmers who can consistently articulate the decisions they make while coding and deeply understand how the disparate parts of software come together to produce sophisticated applications.

Furthermore, once mastered and refined, these skills are essential both in the short run for delivering code and in the long run for sustaining code quality. They set the foundations for making higher-level decisions about code. They also help engineers develop better taste, since through practice they gain a robust conceptual model of the systems they will build in the future.

If all the above is true, then interview questions are not something to resent but something to welcome. They are a bite-sized way for software engineers to rehearse the "finger-feel" of designing code, improving their quality throughput while still meeting deadlines and increasing their chances of getting a job.

The Plan

In the next post, I will devise a rubric for practicing interview questions in a way that encourages better coding, using design recipes and study techniques to ensure that your answers communicate well. It will also cover avoiding the pitfalls common to candidates who fail tech interviews, and writing better solutions by reviewing previous questions on a schedule.
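As a rough illustration of reviewing previous questions on a schedule, here is a minimal Python sketch of a Leitner-style spaced repetition schedule: a question solved within the time limit waits twice as long before its next review, and a miss or an overtime attempt resets it to the next day. The doubling rule, the one-day reset, and the ReviewItem fields are all assumptions for illustration, not the rubric planned for the next post.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ReviewItem:
    """A practice question and when to see it next (hypothetical fields)."""
    question: str
    due: date
    interval_days: int = 1


def review(item: ReviewItem, solved_in_time: bool, today: date) -> ReviewItem:
    """Reschedule an item after one timed attempt."""
    # Success doubles the gap; a miss or an overtime attempt resets it.
    interval = item.interval_days * 2 if solved_in_time else 1
    return ReviewItem(item.question, today + timedelta(days=interval), interval)


def due_on(items: list[ReviewItem], today: date) -> list[ReviewItem]:
    """Items whose review date has arrived."""
    return [item for item in items if item.due <= today]


start = date(2024, 1, 1)
item = ReviewItem("Two Sum", due=start)
item = review(item, solved_in_time=True, today=start)      # next: Jan 3
item = review(item, solved_in_time=True, today=item.due)   # next: Jan 7
item = review(item, solved_in_time=False, today=item.due)  # next: Jan 8
print(item)
```

Tying the reset to going overtime, not just to getting the answer wrong, lets the same schedule practice speed as well as recall.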

Then there will be posts applying my rubric to the first 50 easy questions on LeetCode, ordered from lowest pass rate to highest, to see if this model develops my ability to answer interview questions verifiably and with speed.

Finally, I will journal my application process and do postmortems of my algorithm interviews. Maybe I'm wrong! Here's to hoping I'm right.